从前文分析来看,不连续页面管理的初始化较为简单,现在分析一下具体的分配实现。

vmalloc内存申请函数入口为vmalloc():

【file:/mm/vmalloc.c】

/**

* vmalloc - allocate virtually contiguous memory

* @size: allocation size

* Allocate enough pages to cover @size from the page level

* allocator and map them into contiguous kernel virtual space.

*

* For tight control over page level allocator and protection flags

* use __vmalloc() instead.

*/

void *vmalloc(unsigned long size)

{

return __vmalloc_node_flags(size, NUMA_NO_NODE,

GFP_KERNEL | __GFP_HIGHMEM);

}

该函数简单地封装调用__vmalloc_node_flags()。__vmalloc_node_flags()的存在,主要是用于指定申请不连续内存页面所来源的node结点。

【file:/mm/vmalloc.c】

static inline void *__vmalloc_node_flags(unsigned long size,

int node, gfp_t flags)

{

return __vmalloc_node(size, 1, flags, PAGE_KERNEL,

node, __builtin_return_address(0));

}

__vmalloc_node_flags()从结点请求分配连续虚拟内存,而__vmalloc_node_flags()则是封装__vmalloc_node()。

【file:/mm/vmalloc.c】

/**

* __vmalloc_node - allocate virtually contiguous memory

* @size: allocation size

* @align: desired alignment

* @gfp_mask: flags for the page level allocator

* @prot: protection mask for the allocated pages

* @node: node to use for allocation or NUMA_NO_NODE

* @caller: caller's return address

*

* Allocate enough pages to cover @size from the page level

* allocator with @gfp_mask flags. Map them into contiguous

* kernel virtual space, using a pagetable protection of @prot.

*/

static void *__vmalloc_node(unsigned long size, unsigned long align,

gfp_t gfp_mask, pgprot_t prot,

int node, const void *caller)

{

return __vmalloc_node_range(size, align, VMALLOC_START, VMALLOC_END,

gfp_mask, prot, node, caller);

}

最终调用__vmalloc_node_range()函数,该函数才是vmalloc的具体实现。现在具体分析一下函数:

【file:/mm/vmalloc.c】

/**

* __vmalloc_node_range - allocate virtually contiguous memory

* @size: allocation size

* @align: desired alignment

* @start: vm area range start

* @end: vm area range end

* @gfp_mask: flags for the page level allocator

* @prot: protection mask for the allocated pages

* @node: node to use for allocation or NUMA_NO_NODE

* @caller: caller's return address

*

* Allocate enough pages to cover @size from the page level

* allocator with @gfp_mask flags. Map them into contiguous

* kernel virtual space, using a pagetable protection of @prot.

*/

void *__vmalloc_node_range(unsigned long size, unsigned long align,

unsigned long start, unsigned long end, gfp_t gfp_mask,

pgprot_t prot, int node, const void *caller)

{

struct vm_struct *area;

void *addr;

unsigned long real_size = size;

size = PAGE_ALIGN(size);

if (!size || (size >> PAGE_SHIFT) > totalram_pages)

goto fail;

area = __get_vm_area_node(size, align, VM_ALLOC | VM_UNINITIALIZED,

start, end, node, gfp_mask, caller);

if (!area)

goto fail;

addr = __vmalloc_area_node(area, gfp_mask, prot, node);

if (!addr)

return NULL;

/*

* In this function, newly allocated vm_struct has VM_UNINITIALIZED

* flag. It means that vm_struct is not fully initialized.

* Now, it is fully initialized, so remove this flag here.

*/

clear_vm_uninitialized_flag(area);

/*

* A ref_count = 2 is needed because vm_struct allocated in

* __get_vm_area_node() contains a reference to the virtual address of

* the vmalloc'ed block.

*/

kmemleak_alloc(addr, real_size, 2, gfp_mask);

return addr;

fail:

warn_alloc_failed(gfp_mask, 0,

"vmalloc: allocation failure: %lu bytes\n",

real_size);

return NULL;

}

该函数首先对申请内存的大小做对齐后,如果大小为0或者大于总内存,则返回失败;继而调用__get_vm_area_node()向内核请求一个空间大小相匹配的虚拟地址空间,返回管理信息结构vm_struct;而调用__vmalloc_area_node()将根据vm_struct的信息进行内存空间申请;接着通过clear_vm_uninitialized_flag()标示内存空间初始化;最后调用kmemleak_alloc()进行内存分配泄漏调测。

深入__get_vm_area_node()函数分析:

【file:/mm/vmalloc.c】

static struct vm_struct *__get_vm_area_node(unsigned long size,

unsigned long align, unsigned long flags, unsigned long start,

unsigned long end, int node, gfp_t gfp_mask, const void *caller)

{

struct vmap_area *va;

struct vm_struct *area;

BUG_ON(in_interrupt());

if (flags & VM_IOREMAP)

align = 1ul << clamp(fls(size), PAGE_SHIFT, IOREMAP_MAX_ORDER);

size = PAGE_ALIGN(size);

if (unlikely(!size))

return NULL;

area = kzalloc_node(sizeof(*area), gfp_mask & GFP_RECLAIM_MASK, node);

if (unlikely(!area))

return NULL;

/*

* We always allocate a guard page.

*/

size += PAGE_SIZE;

va = alloc_vmap_area(size, align, start, end, node, gfp_mask);

if (IS_ERR(va)) {

kfree(area);

return NULL;

}

setup_vmalloc_vm(area, va, flags, caller);

return area;

}

如果标记为VM_IOREMAP,表示它是用于特殊架构修正内存对齐;通过PAGE_ALIGN对内存进行对齐操作,如果申请的内存空间大小小于内存页面大小,那么将返回NULL;接着通过kzalloc_node()申请vmap_area结构空间;不连续内存页面的申请,将会新增一页内存作为保护页,继而调用alloc_vmap_area()申请指定的虚拟地址范围内的未映射空间,说白了就是申请不连续的物理内存;最后setup_vmalloc_vm()设置vm_struct和vmap_area收尾,用于将分配的虚拟地址空间信息返回出去。

而alloc_vmap_area()则是申请不连续内存页面的具体实现。

【file:/mm/vmalloc.c】

/*

* Allocate a region of KVA of the specified size and alignment, within the

* vstart and vend.

*/

static struct vmap_area *alloc_vmap_area(unsigned long size,

unsigned long align,

unsigned long vstart, unsigned long vend,

int node, gfp_t gfp_mask)

{

struct vmap_area *va;

struct rb_node *n;

unsigned long addr;

int purged = 0;

struct vmap_area *first;

BUG_ON(!size);

BUG_ON(size & ~PAGE_MASK);

BUG_ON(!is_power_of_2(align));

va = kmalloc_node(sizeof(struct vmap_area),

gfp_mask & GFP_RECLAIM_MASK, node);

if (unlikely(!va))

return ERR_PTR(-ENOMEM);

/*

* Only scan the relevant parts containing pointers to other objects

* to avoid false negatives.

*/

kmemleak_scan_area(&va->rb_node, SIZE_MAX, gfp_mask & GFP_RECLAIM_MASK);

retry:

spin_lock(&vmap_area_lock);

/*

* Invalidate cache if we have more permissive parameters.

* cached_hole_size notes the largest hole noticed _below_

* the vmap_area cached in free_vmap_cache: if size fits

* into that hole, we want to scan from vstart to reuse

* the hole instead of allocating above free_vmap_cache.

* Note that __free_vmap_area may update free_vmap_cache

* without updating cached_hole_size or cached_align.

*/

if (!free_vmap_cache ||

size < cached_hole_size ||

vstart < cached_vstart ||

align < cached_align) {

nocache:

cached_hole_size = 0;

free_vmap_cache = NULL;

}

/* record if we encounter less permissive parameters */

cached_vstart = vstart;

cached_align = align;

/* find starting point for our search */

if (free_vmap_cache) {

first = rb_entry(free_vmap_cache, struct vmap_area, rb_node);

addr = ALIGN(first->va_end, align);

if (addr < vstart)

goto nocache;

if (addr + size < addr)

goto overflow;

} else {

addr = ALIGN(vstart, align);

if (addr + size < addr)

goto overflow;

n = vmap_area_root.rb_node;

first = NULL;

while (n) {

struct vmap_area *tmp;

tmp = rb_entry(n, struct vmap_area, rb_node);

if (tmp->va_end >= addr) {

first = tmp;

if (tmp->va_start <= addr)

break;

n = n->rb_left;

} else

n = n->rb_right;

}

if (!first)

goto found;

}

/* from the starting point, walk areas until a suitable hole is found */

while (addr + size > first->va_start && addr + size <= vend) {

if (addr + cached_hole_size < first->va_start)

cached_hole_size = first->va_start - addr;

addr = ALIGN(first->va_end, align);

if (addr + size < addr)

goto overflow;

if (list_is_last(&first->list, &vmap_area_list))

goto found;

first = list_entry(first->list.next,

struct vmap_area, list);

}

found:

if (addr + size > vend)

goto overflow;

va->va_start = addr;

va->va_end = addr + size;

va->flags = 0;

__insert_vmap_area(va);

free_vmap_cache = &va->rb_node;

spin_unlock(&vmap_area_lock);

BUG_ON(va->va_start & (align-1));

BUG_ON(va->va_start < vstart);

BUG_ON(va->va_end > vend);

return va;

overflow:

spin_unlock(&vmap_area_lock);

if (!purged) {

purge_vmap_area_lazy();

purged = 1;

goto retry;

}

if (printk_ratelimit())

printk(KERN_WARNING

"vmap allocation for size %lu failed: "

"use vmalloc= to increase size.\n", size);

kfree(va);

return ERR_PTR(-EBUSY);

}

其通过kmalloc_node()申请vmap_area空间,仅使用GFP_RECLAIM_MASK标识;接着调用kmemleak_scan_area()将该内存空间添加扫描区域内的内存块中;加锁vmap_area_lock之后紧接着的条件判断中,如果free_vmap_cache为空,意味着是首次进行vmalloc内存分配,而cached_hole_size记录最大空洞空间大小,如果size小于最大空洞那么表示存在着可以复用的空洞,其余的则是cached_vstart起始位置和cached_align对齐大小的比较,只要最终条件判断结果为true的情况下,那么都将会自vmalloc空间起始去查找合适的内存空间进行分配;往下记录cached_vstart和cached_align的最小合适的参数。

继而判断free_vmap_cache是否为空,free_vmap_cache记录着最近释放的或最近注册使用的不连续内存页面空间,是用以加快空间的搜索速度的。如果free_vmap_cache不为空的情况下,将对申请的空间进行检查,当申请的内存空间超出范围将不使用cache,而当空间溢出时将直接跳转至overflow退出申请。如果free_vmap_cache为空的情况下,将先做溢出检验,接着循环查找vmap_area_root红黑树,尝试找到vstart附件已经分配出去的虚拟地址空间。若能找到的话,first将不为空,否则在first为空的情况下,表示vstart为起始的虚拟地址空间未被使用过,将会直接对该虚拟地址空间进行分配;若first不为空,意味着该空间曾经分配过,那么将会进入while分支进行处理,该循环是从first为起点遍历vmap_area_list链表管理的虚拟地址空间链表进行查找,如果找合适的未使用的虚拟地址空间或者遍历到了链表末尾,除非空间溢出,否则都表示找到了该空间。

找到了合适的虚拟地址空间后,对地址空间进行分配,并将分配信息记录到vmap_area结构中,最后将该管理结构通过__insert_vmap_area()插入到vmap_area_root红黑树中,以及vmap_area_list链表中。

至此虚拟地址空间分配完毕。

但是如果想要深入了解不连续内存页面的虚拟地址空间管理结构,就需要深入__insert_vmap_area()函数。

【file:/mm/vmalloc.c】

static void __insert_vmap_area(struct vmap_area *va)

{

struct rb_node **p = &vmap_area_root.rb_node;

struct rb_node *parent = NULL;

struct rb_node *tmp;

while (*p) {

struct vmap_area *tmp_va;

parent = *p;

tmp_va = rb_entry(parent, struct vmap_area, rb_node);

if (va->va_start < tmp_va->va_end)

p = &(*p)->rb_left;

else if (va->va_end > tmp_va->va_start)

p = &(*p)->rb_right;

else

BUG();

}

rb_link_node(&va->rb_node, parent, p);

rb_insert_color(&va->rb_node, &vmap_area_root);

/* address-sort this list */

tmp = rb_prev(&va->rb_node);

if (tmp) {

struct vmap_area *prev;

prev = rb_entry(tmp, struct vmap_area, rb_node);

list_add_rcu(&va->list, &prev->list);

} else

list_add_rcu(&va->list, &vmap_area_list);

}

分析该函数,while循环是对vmap_area_root红黑树进行查找,找到合适的位置后通过rb_link_node()插入红黑树,继而rb_insert_color()对红黑树进行平衡调整;最后根据插入的红黑树的父子节点关系插入到vmap_area_list链表中。由此逻辑,可以知道已分配的虚拟地址空间地址是通过红黑树根据地址空间的高低次序进行管理的,同时又通过链表将地址空间全部串联起来。

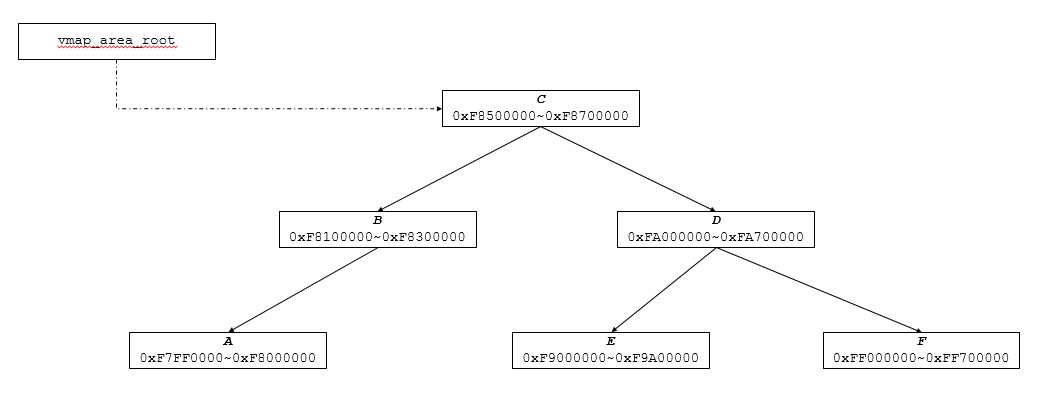

如下图则是红黑树管理的示意例图。

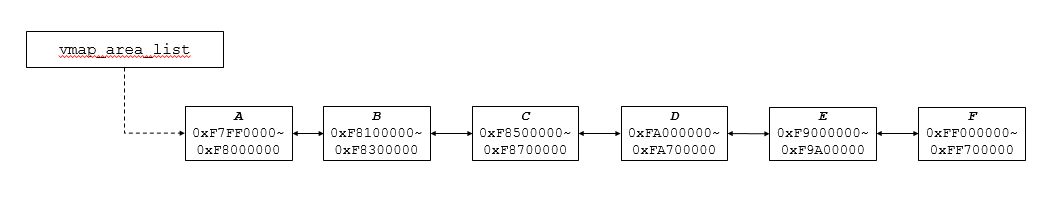

相对应红黑树的管理结构,其链表串联的情况则是下面这样的。

回到__vmalloc_node_range()函数实现中,看一下__vmalloc_area_node()实现。

【file:/mm/vmalloc.c】

static void *__vmalloc_area_node(struct vm_struct *area, gfp_t gfp_mask,

pgprot_t prot, int node)

{

const int order = 0;

struct page **pages;

unsigned int nr_pages, array_size, i;

gfp_t nested_gfp = (gfp_mask & GFP_RECLAIM_MASK) | __GFP_ZERO;

nr_pages = get_vm_area_size(area) >> PAGE_SHIFT;

array_size = (nr_pages * sizeof(struct page *));

area->nr_pages = nr_pages;

/* Please note that the recursion is strictly bounded. */

if (array_size > PAGE_SIZE) {

pages = __vmalloc_node(array_size, 1, nested_gfp|__GFP_HIGHMEM,

PAGE_KERNEL, node, area->caller);

area->flags |= VM_VPAGES;

} else {

pages = kmalloc_node(array_size, nested_gfp, node);

}

area->pages = pages;

if (!area->pages) {

remove_vm_area(area->addr);

kfree(area);

return NULL;

}

for (i = 0; i < area->nr_pages; i++) {

struct page *page;

gfp_t tmp_mask = gfp_mask | __GFP_NOWARN;

if (node == NUMA_NO_NODE)

page = alloc_page(tmp_mask);

else

page = alloc_pages_node(node, tmp_mask, order);

if (unlikely(!page)) {

/* Successfully allocated i pages, free them in __vunmap() */

area->nr_pages = i;

goto fail;

}

area->pages[i] = page;

}

if (map_vm_area(area, prot, &pages))

goto fail;

return area->addr;

fail:

warn_alloc_failed(gfp_mask, order,

"vmalloc: allocation failure, allocated %ld of %ld bytes\n",

(area->nr_pages*PAGE_SIZE), area->size);

vfree(area->addr);

return NULL;

}

该函数首先计算需要申请的内存空间页面数量nr_pages以及需要存储等量页面指针的数组空间大小,如果该数组所需内存空间超过单个页面的时候,将通过__vmalloc_node()申请,否则使用kmalloc_node()进行申请。如果存放页面管理的数组空间申请失败,则内存申请失败并对前面申请的虚拟空间还回。接着for循环主要是根据页面数量,循环申请内存页面空间。物理内存空间申请成功后,将通过map_vm_area()进行内存映射处理。

vmalloc不连续内存页面空间的申请分析完毕。