The previous article analyzed the initialization of the SLUB allocator; this one continues with how SLUB creates a slab cache.

The entry point for creating a slab cache in SLUB is kmem_cache_create(), implemented as follows:

【file:/mm/slab_common.c】

struct kmem_cache *

kmem_cache_create(const char *name, size_t size, size_t align,

unsigned long flags, void (*ctor)(void *))

{

return kmem_cache_create_memcg(NULL, name, size, align, flags, ctor, NULL);

}

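The parameter name is the name of the cache to create (it identifies the cache in /proc/slabinfo), size is the size of each object in the cache, align is the required object alignment, flags are the SLAB_* flags that control the cache's behavior, and ctor is a constructor applied to each object when the cache allocates new pages for a slab. The function itself is a thin wrapper around kmem_cache_create_memcg().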

Next, the implementation of kmem_cache_create_memcg():

【file:/mm/slab_common.c】

/*

* kmem_cache_create - Create a cache.

* @name: A string which is used in /proc/slabinfo to identify this cache.

* @size: The size of objects to be created in this cache.

* @align: The required alignment for the objects.

* @flags: SLAB flags

* @ctor: A constructor for the objects.

*

* Returns a ptr to the cache on success, NULL on failure.

* Cannot be called within a interrupt, but can be interrupted.

* The @ctor is run when new pages are allocated by the cache.

*

* The flags are

*

* %SLAB_POISON - Poison the slab with a known test pattern (a5a5a5a5)

* to catch references to uninitialised memory.

*

* %SLAB_RED_ZONE - Insert `Red' zones around the allocated memory to check

* for buffer overruns.

*

* %SLAB_HWCACHE_ALIGN - Align the objects in this cache to a hardware

* cacheline. This can be beneficial if you're counting cycles as closely

* as davem.

*/

struct kmem_cache *

kmem_cache_create_memcg(struct mem_cgroup *memcg, const char *name, size_t size,

size_t align, unsigned long flags, void (*ctor)(void *),

struct kmem_cache *parent_cache)

{

struct kmem_cache *s = NULL;

int err;

get_online_cpus();

mutex_lock(&slab_mutex);

err = kmem_cache_sanity_check(memcg, name, size);

if (err)

goto out_unlock;

if (memcg) {

/*

* Since per-memcg caches are created asynchronously on first

* allocation (see memcg_kmem_get_cache()), several threads can

* try to create the same cache, but only one of them may

* succeed. Therefore if we get here and see the cache has

* already been created, we silently return NULL.

*/

if (cache_from_memcg_idx(parent_cache, memcg_cache_id(memcg)))

goto out_unlock;

}

/*

* Some allocators will constraint the set of valid flags to a subset

* of all flags. We expect them to define CACHE_CREATE_MASK in this

* case, and we'll just provide them with a sanitized version of the

* passed flags.

*/

flags &= CACHE_CREATE_MASK;

s = __kmem_cache_alias(memcg, name, size, align, flags, ctor);

if (s)

goto out_unlock;

err = -ENOMEM;

s = kmem_cache_zalloc(kmem_cache, GFP_KERNEL);

if (!s)

goto out_unlock;

s->object_size = s->size = size;

s->align = calculate_alignment(flags, align, size);

s->ctor = ctor;

s->name = kstrdup(name, GFP_KERNEL);

if (!s->name)

goto out_free_cache;

err = memcg_alloc_cache_params(memcg, s, parent_cache);

if (err)

goto out_free_cache;

err = __kmem_cache_create(s, flags);

if (err)

goto out_free_cache;

s->refcount = 1;

list_add(&s->list, &slab_caches);

memcg_register_cache(s);

out_unlock:

mutex_unlock(&slab_mutex);

put_online_cpus();

if (err) {

/*

* There is no point in flooding logs with warnings or

* especially crashing the system if we fail to create a cache

* for a memcg. In this case we will be accounting the memcg

* allocation to the root cgroup until we succeed to create its

* own cache, but it isn't that critical.

*/

if (!memcg)

return NULL;

if (flags & SLAB_PANIC)

panic("kmem_cache_create: Failed to create slab '%s'. Error %d\n",

name, err);

else {

printk(KERN_WARNING "kmem_cache_create(%s) failed with error %d",

name, err);

dump_stack();

}

return NULL;

}

return s;

out_free_cache:

memcg_free_cache_params(s);

kfree(s->name);

kmem_cache_free(kmem_cache, s);

goto out_unlock;

}

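The get_online_cpus() at the function entry and the put_online_cpus() at the exit are a pair: they keep the set of online CPUs stable (CPU hotplug is held off) while the cache is being created. kmem_cache_sanity_check() performs validity checks, such as whether a cache with the given name already exists; it only does real work when CONFIG_DEBUG_VM is enabled. A non-NULL memcg means the cache being created belongs to a memory cgroup. Because per-memcg caches are created asynchronously on their first allocation, several threads may try to create the same cache and only one of them may succeed; so if cache_from_memcg_idx() shows that the cache already exists, the function jumps to out_unlock and silently returns NULL. Next, flags is masked with CACHE_CREATE_MASK so that only the flags this allocator supports are kept. Then __kmem_cache_alias() checks whether an existing cache has an object size (and compatible flags and alignment) matching the one being requested; if so, that cache is reused under an alias instead of creating a new one.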

The implementation of __kmem_cache_alias():

【file:/mm/slub.c】

struct kmem_cache *

__kmem_cache_alias(struct mem_cgroup *memcg, const char *name, size_t size,

size_t align, unsigned long flags, void (*ctor)(void *))

{

struct kmem_cache *s;

s = find_mergeable(memcg, size, align, flags, name, ctor);

if (s) {

s->refcount++;

/*

* Adjust the object sizes so that we clear

* the complete object on kzalloc.

*/

s->object_size = max(s->object_size, (int)size);

s->inuse = max_t(int, s->inuse, ALIGN(size, sizeof(void *)));

if (sysfs_slab_alias(s, name)) {

s->refcount--;

s = NULL;

}

}

return s;

}

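The function calls find_mergeable() to look for a kmem_cache that can be merged with the requested one. If one is found, its reference count is incremented, and its object_size and inuse (the metadata offset) are enlarged where necessary to cover the new size, so that kzalloc() clears the complete object. Finally sysfs_slab_alias() adds the alias name in sysfs; if that fails, the reference count is dropped again and NULL is returned.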
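The size bookkeeping of a successful merge can be sketched as a small userspace C model. struct toy_cache and toy_alias() are hypothetical stand-ins, not the kernel's struct kmem_cache or __kmem_cache_alias(), and the sysfs step is omitted.

```c
#include <assert.h>
#include <stddef.h>

/* Round x up to a multiple of a (a power of two), like the kernel's ALIGN(). */
#define TOY_ALIGN(x, a) (((x) + (a) - 1) & ~((size_t)(a) - 1))

/* Hypothetical, heavily simplified stand-in for struct kmem_cache. */
struct toy_cache {
	int refcount;
	size_t object_size; /* bytes requested by the users of the cache */
	size_t inuse;       /* offset at which metadata starts */
	size_t size;        /* full per-object stride (not changed by a merge) */
};

/* Size bookkeeping of __kmem_cache_alias() once find_mergeable() has
 * returned cache s for a request of `size` bytes. */
static void toy_alias(struct toy_cache *s, size_t size)
{
	size_t need = TOY_ALIGN(size, sizeof(void *));

	s->refcount++;
	/* kzalloc() must clear the larger of the two object sizes. */
	if (size > s->object_size)
		s->object_size = size;
	/* The in-use area must cover the word-aligned new object. */
	if (need > s->inuse)
		s->inuse = need;
}
```

For example, merging a 62-byte request into a cache whose object_size is 60 raises object_size to 62, while inuse stays 64, since ALIGN(62, sizeof(void *)) is 64 on both 32-bit and 64-bit machines.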

Going one level deeper, the implementation of find_mergeable():

【file:/mm/slub.c】

static struct kmem_cache *find_mergeable(struct mem_cgroup *memcg, size_t size,

size_t align, unsigned long flags, const char *name,

void (*ctor)(void *))

{

struct kmem_cache *s;

if (slub_nomerge || (flags & SLUB_NEVER_MERGE))

return NULL;

if (ctor)

return NULL;

size = ALIGN(size, sizeof(void *));

align = calculate_alignment(flags, align, size);

size = ALIGN(size, align);

flags = kmem_cache_flags(size, flags, name, NULL);

list_for_each_entry(s, &slab_caches, list) {

if (slab_unmergeable(s))

continue;

if (size > s->size)

continue;

if ((flags & SLUB_MERGE_SAME) != (s->flags & SLUB_MERGE_SAME))

continue;

/*

* Check if alignment is compatible.

* Courtesy of Adrian Drzewiecki

*/

if ((s->size & ~(align - 1)) != s->size)

continue;

if (s->size - size >= sizeof(void *))

continue;

if (!cache_match_memcg(s, memcg))

continue;

return s;

}

return NULL;

}

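find_mergeable() first returns NULL if merging is disabled (slub_nomerge, or a flag in SLUB_NEVER_MERGE) or if a constructor was given. It then computes the word-aligned object size, the alignment, and the effective flags of the cache to be created, and walks the whole slab_caches list with list_for_each_entry(). slab_unmergeable() rejects caches that may not be merged, mainly based on their flags and on whether their objects have a constructor. A candidate is also skipped if its object size s->size is smaller than the requested size; if (flags & SLUB_MERGE_SAME) != (s->flags & SLUB_MERGE_SAME), i.e. its merge-relevant flags differ; if (s->size & ~(align - 1)) != s->size, i.e. its size is not a multiple of the requested alignment; if s->size - size >= sizeof(void *), i.e. each object would waste a pointer-sized word or more; and if !cache_match_memcg(s, memcg), i.e. it belongs to a different memcg. The first cache that passes all of these checks is returned; if none does, NULL is returned.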
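Only the size and alignment tests of find_mergeable() are sketched below as a userspace C model; the flag, constructor, and memcg checks are left out. toy_size_compatible() is a hypothetical name, and align is assumed to be an already computed power of two (calculate_alignment() is not modeled).

```c
#include <assert.h>
#include <stddef.h>

/* Round x up to a multiple of a (a power of two), like the kernel's ALIGN(). */
#define TOY_ALIGN(x, a) (((x) + (a) - 1) & ~((size_t)(a) - 1))

/* Returns 1 if an existing cache with object stride cand_size could serve
 * requests of req_size bytes at alignment align, mirroring the size and
 * alignment tests of find_mergeable(); flags, ctor and memcg are ignored. */
static int toy_size_compatible(size_t cand_size, size_t req_size, size_t align)
{
	req_size = TOY_ALIGN(req_size, sizeof(void *));
	req_size = TOY_ALIGN(req_size, align);

	if (req_size > cand_size)
		return 0; /* candidate objects are too small */
	if ((cand_size & ~(align - 1)) != cand_size)
		return 0; /* candidate stride is not a multiple of align */
	if (cand_size - req_size >= sizeof(void *))
		return 0; /* merging would waste a word or more per object */
	return 1;
}
```

With align = 8, a 64-byte cache can host a 60-byte request (both round to 64), but not a 40-byte one, because every object would waste 24 bytes.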

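Back in kmem_cache_create_memcg(): if __kmem_cache_alias() finds a mergeable cache, its kmem_cache is returned directly. Otherwise a new cache is created. kmem_cache_zalloc() allocates a zeroed kmem_cache object, and its object size, alignment (from calculate_alignment()), and constructor are initialized; the cache name is copied into newly allocated memory with kstrdup(). memcg_alloc_cache_params() allocates and initializes the memcg_params member of kmem_cache, and __kmem_cache_create() allocates and sets up SLUB's management structures and initializes the remaining kmem_cache fields; it is analyzed in detail below. On success, the reference count is set to 1, the cache is added to the slab_caches list, and memcg_register_cache() registers it.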

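The code at the out_unlock label finishes the creation by releasing slab_mutex and the CPU hotplug reference. If creation failed (err is non-zero), the error branch runs: for a memcg cache it silently returns NULL; otherwise it panics when SLAB_PANIC is set, and prints a warning with a stack dump when it is not. The out_free_cache label releases what was allocated (the memcg parameters, the name, and the kmem_cache object itself) when initializing the kmem_cache fails, and then jumps to out_unlock for the failure handling.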

Now look at the implementation of __kmem_cache_create():

【file:/mm/slub.c】

int __kmem_cache_create(struct kmem_cache *s, unsigned long flags)

{

int err;

err = kmem_cache_open(s, flags);

if (err)

return err;

/* Mutex is not taken during early boot */

if (slab_state <= UP)

return 0;

memcg_propagate_slab_attrs(s);

mutex_unlock(&slab_mutex);

err = sysfs_slab_add(s);

mutex_lock(&slab_mutex);

if (err)

kmem_cache_close(s);

return err;

}

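kmem_cache_open() initializes the SLUB structures of the cache. During early boot (slab_state <= UP) the function returns right after that, since slab_mutex is not taken at that stage. Otherwise slab_mutex is released before sysfs_slab_add() is called, because the sysfs functions do a lot of work and holding the mutex across them could block other cache operations; sysfs_slab_add() adds the kmem_cache to sysfs. If that fails, kmem_cache_close() tears the SLUB structures down again.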

Digging into kmem_cache_open():

【file:/mm/slub.c】

static int kmem_cache_open(struct kmem_cache *s, unsigned long flags)

{

s->flags = kmem_cache_flags(s->size, flags, s->name, s->ctor);

s->reserved = 0;

if (need_reserve_slab_rcu && (s->flags & SLAB_DESTROY_BY_RCU))

s->reserved = sizeof(struct rcu_head);

if (!calculate_sizes(s, -1))

goto error;

if (disable_higher_order_debug) {

/*

* Disable debugging flags that store metadata if the min slab

* order increased.

*/

if (get_order(s->size) > get_order(s->object_size)) {

s->flags &= ~DEBUG_METADATA_FLAGS;

s->offset = 0;

if (!calculate_sizes(s, -1))

goto error;

}

}

#if defined(CONFIG_HAVE_CMPXCHG_DOUBLE) && \

defined(CONFIG_HAVE_ALIGNED_STRUCT_PAGE)

if (system_has_cmpxchg_double() && (s->flags & SLAB_DEBUG_FLAGS) == 0)

/* Enable fast mode */

s->flags |= __CMPXCHG_DOUBLE;

#endif

/*

* The larger the object size is, the more pages we want on the partial

* list to avoid pounding the page allocator excessively.

*/

set_min_partial(s, ilog2(s->size) / 2);

/*

* cpu_partial determined the maximum number of objects kept in the

* per cpu partial lists of a processor.

*

* Per cpu partial lists mainly contain slabs that just have one

* object freed. If they are used for allocation then they can be

* filled up again with minimal effort. The slab will never hit the

* per node partial lists and therefore no locking will be required.

*

* This setting also determines

*

* A) The number of objects from per cpu partial slabs dumped to the

* per node list when we reach the limit.

* B) The number of objects in cpu partial slabs to extract from the

* per node list when we run out of per cpu objects. We only fetch

* 50% to keep some capacity around for frees.

*/

if (!kmem_cache_has_cpu_partial(s))

s->cpu_partial = 0;

else if (s->size >= PAGE_SIZE)

s->cpu_partial = 2;

else if (s->size >= 1024)

s->cpu_partial = 6;

else if (s->size >= 256)

s->cpu_partial = 13;

else

s->cpu_partial = 30;

#ifdef CONFIG_NUMA

s->remote_node_defrag_ratio = 1000;

#endif

if (!init_kmem_cache_nodes(s))

goto error;

if (alloc_kmem_cache_cpus(s))

return 0;

free_kmem_cache_nodes(s);

error:

if (flags & SLAB_PANIC)

panic("Cannot create slab %s size=%lu realsize=%u "

"order=%u offset=%u flags=%lx\n",

s->name, (unsigned long)s->size, s->size,

oo_order(s->oo), s->offset, flags);

return -EINVAL;

}

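kmem_cache_flags() computes the cache's flags, mainly to decide whether SLUB debugging is enabled for this cache. If need_reserve_slab_rcu is true and the cache uses SLAB_DESTROY_BY_RCU, sizeof(struct rcu_head) bytes are reserved at the end of each slab (s->reserved). calculate_sizes() then computes and initializes the size-related fields of the kmem_cache structure.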

The implementation of calculate_sizes():

【file:/mm/slub.c】

/*

* calculate_sizes() determines the order and the distribution of data within

* a slab object.

*/

static int calculate_sizes(struct kmem_cache *s, int forced_order)

{

unsigned long flags = s->flags;

unsigned long size = s->object_size;

int order;

/*

* Round up object size to the next word boundary. We can only

* place the free pointer at word boundaries and this determines

* the possible location of the free pointer.

*/

size = ALIGN(size, sizeof(void *));

#ifdef CONFIG_SLUB_DEBUG

/*

* Determine if we can poison the object itself. If the user of

* the slab may touch the object after free or before allocation

* then we should never poison the object itself.

*/

if ((flags & SLAB_POISON) && !(flags & SLAB_DESTROY_BY_RCU) &&

!s->ctor)

s->flags |= __OBJECT_POISON;

else

s->flags &= ~__OBJECT_POISON;

/*

* If we are Redzoning then check if there is some space between the

* end of the object and the free pointer. If not then add an

* additional word to have some bytes to store Redzone information.

*/

if ((flags & SLAB_RED_ZONE) && size == s->object_size)

size += sizeof(void *);

#endif

/*

* With that we have determined the number of bytes in actual use

* by the object. This is the potential offset to the free pointer.

*/

s->inuse = size;

if (((flags & (SLAB_DESTROY_BY_RCU | SLAB_POISON)) ||

s->ctor)) {

/*

* Relocate free pointer after the object if it is not

* permitted to overwrite the first word of the object on

* kmem_cache_free.

*

* This is the case if we do RCU, have a constructor or

* destructor or are poisoning the objects.

*/

s->offset = size;

size += sizeof(void *);

}

#ifdef CONFIG_SLUB_DEBUG

if (flags & SLAB_STORE_USER)

/*

* Need to store information about allocs and frees after

* the object.

*/

size += 2 * sizeof(struct track);

if (flags & SLAB_RED_ZONE)

/*

* Add some empty padding so that we can catch

* overwrites from earlier objects rather than let

* tracking information or the free pointer be

* corrupted if a user writes before the start

* of the object.

*/

size += sizeof(void *);

#endif

/*

* SLUB stores one object immediately after another beginning from

* offset 0. In order to align the objects we have to simply size

* each object to conform to the alignment.

*/

size = ALIGN(size, s->align);

s->size = size;

if (forced_order >= 0)

order = forced_order;

else

order = calculate_order(size, s->reserved);

if (order < 0)

return 0;

s->allocflags = 0;

if (order)

s->allocflags |= __GFP_COMP;

if (s->flags & SLAB_CACHE_DMA)

s->allocflags |= GFP_DMA;

if (s->flags & SLAB_RECLAIM_ACCOUNT)

s->allocflags |= __GFP_RECLAIMABLE;

/*

* Determine the number of objects per slab

*/

s->oo = oo_make(order, size, s->reserved);

s->min = oo_make(get_order(size), size, s->reserved);

if (oo_objects(s->oo) > oo_objects(s->max))

s->max = s->oo;

return !!oo_objects(s->oo);

}

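ALIGN(size, sizeof(void *)) at the top rounds the object size up to a multiple of the pointer size, because the free pointer can only be stored at a word boundary. With CONFIG_SLUB_DEBUG enabled, the check if ((flags & SLAB_POISON) && !(flags & SLAB_DESTROY_BY_RCU) && !s->ctor) decides whether the object itself may be poisoned: if users of the cache may touch an object after it has been freed (SLAB_DESTROY_BY_RCU) or rely on its contents before allocation (a constructor), its contents must not be overwritten, so __OBJECT_POISON is cleared; otherwise it is set. Poisoning fills objects with known byte patterns so that use of uninitialized memory and use-after-free can be detected. The bytes used are: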

#define POISON_INUSE 0x5a /* for use-uninitialised poisoning */

#define POISON_FREE 0x6b /* for use-after-free poisoning */

#define POISON_END 0xa5 /* end-byte of poisoning */
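The free-object poison pattern can be modeled in userspace as below. This assumes, from reading mm/slub.c of this kernel generation rather than from the code shown here, that a freed object is filled with POISON_FREE except for its last byte, which gets POISON_END; toy_poison_free() and toy_check_poison() are hypothetical helpers.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define POISON_FREE 0x6b /* for use-after-free poisoning */
#define POISON_END  0xa5 /* end-byte of poisoning */

/* Poison a freed object (object_size >= 1): every byte but the last gets
 * POISON_FREE, the last one gets POISON_END. */
static void toy_poison_free(unsigned char *obj, size_t object_size)
{
	memset(obj, POISON_FREE, object_size - 1);
	obj[object_size - 1] = POISON_END;
}

/* Return the index of the first byte that no longer matches the pattern,
 * or -1 if the pattern is intact (no write after free was detected). */
static long toy_check_poison(const unsigned char *obj, size_t object_size)
{
	for (size_t i = 0; i + 1 < object_size; i++)
		if (obj[i] != POISON_FREE)
			return (long)i;
	if (obj[object_size - 1] != POISON_END)
		return (long)(object_size - 1);
	return -1;
}
```

A single stray write into a freed 32-byte object, for instance at byte 5, is reported at exactly that index.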

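The next check, if ((flags & SLAB_RED_ZONE) && size == s->object_size), also serves debugging: red zones are placed around the object and checked later to catch buffer overruns. If rounding to a word boundary left no space between the end of the object and the free pointer, an extra word is added to hold the red zone. s->inuse is then set: it is the offset of the metadata, i.e. the number of bytes actually used by the object, and therefore also the candidate offset of the free pointer. The following check, if (((flags & (SLAB_DESTROY_BY_RCU | SLAB_POISON)) || s->ctor)), decides whether the first word of the object may be overwritten by the free pointer on kmem_cache_free(). If not (RCU freeing, a constructor, or poisoning), the free pointer is relocated to just after the object: s->offset records that position, and size grows by one pointer to include it.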

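Also under CONFIG_SLUB_DEBUG: if SLAB_STORE_USER is set, room for two struct track records is added after the object to hold its usage history (one for the last allocation, one for the last free); the definition of struct track shows exactly what is recorded. If SLAB_RED_ZONE is set, one more word of empty padding is added, so that a stray write coming from an earlier object is caught in the padding instead of silently corrupting the tracking information or the free pointer.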

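Finally size is aligned to s->align and stored in s->size. Since forced_order is -1 in this call, calculate_order() computes the page order of a single slab. From that order, allocflags is set (__GFP_COMP for order > 0, GFP_DMA for SLAB_CACHE_DMA, __GFP_RECLAIMABLE for SLAB_RECLAIM_ACCOUNT), and the oo, min, and max fields of kmem_cache are derived: oo holds the chosen order together with the number of objects per slab, min the smallest order that holds a single object, and max is raised to oo if oo holds more objects.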
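The layout arithmetic of calculate_sizes() with CONFIG_SLUB_DEBUG enabled can be reproduced as a userspace C model. The T_* flag values and toy_calc() are hypothetical, T_CTOR stands in for a non-NULL s->ctor, and track_size stands in for sizeof(struct track), whose real value depends on the configuration.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_ALIGN(x, a) (((x) + (a) - 1) & ~((size_t)(a) - 1))

/* Hypothetical flag bits for this model only (not the kernel's values). */
#define T_POISON     0x1
#define T_RED_ZONE   0x2
#define T_STORE_USER 0x4
#define T_RCU        0x8
#define T_CTOR       0x10 /* stands in for s->ctor != NULL */

struct toy_layout {
	size_t inuse;  /* where metadata begins */
	size_t offset; /* free pointer offset; 0 means it overlays the object */
	size_t size;   /* full stride of one object */
};

/* Mirrors the size arithmetic of calculate_sizes() with debugging compiled in. */
static struct toy_layout toy_calc(size_t object_size, size_t align,
				  unsigned flags, size_t track_size)
{
	struct toy_layout l = {0, 0, 0};
	size_t size = TOY_ALIGN(object_size, sizeof(void *));

	/* No slack after the object: add a word for the red zone. */
	if ((flags & T_RED_ZONE) && size == object_size)
		size += sizeof(void *);
	l.inuse = size;

	/* The first word may not be overwritten on free: move the free pointer. */
	if (flags & (T_RCU | T_POISON | T_CTOR)) {
		l.offset = size;
		size += sizeof(void *);
	}
	if (flags & T_STORE_USER)
		size += 2 * track_size; /* alloc and free track records */
	if (flags & T_RED_ZONE)
		size += sizeof(void *); /* trailing padding word */

	l.size = TOY_ALIGN(size, align);
	return l;
}
```

With all debug options on, a 64-byte object grows by one red-zone word, one free-pointer word, two track records, and one padding word; with none of them, inuse and size stay at 64 and offset stays 0.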

Now a closer look at calculate_order():

【file:/mm/slub.c】

static inline int calculate_order(int size, int reserved)

{

int order;

int min_objects;

int fraction;

int max_objects;

/*

* Attempt to find best configuration for a slab. This

* works by first attempting to generate a layout with

* the best configuration and backing off gradually.

*

* First we reduce the acceptable waste in a slab. Then

* we reduce the minimum objects required in a slab.

*/

min_objects = slub_min_objects;

if (!min_objects)

min_objects = 4 * (fls(nr_cpu_ids) + 1);

max_objects = order_objects(slub_max_order, size, reserved);

min_objects = min(min_objects, max_objects);

while (min_objects > 1) {

fraction = 16;

while (fraction >= 4) {

order = slab_order(size, min_objects,

slub_max_order, fraction, reserved);

if (order <= slub_max_order)

return order;

fraction /= 2;

}

min_objects--;

}

/*

* We were unable to place multiple objects in a slab. Now

* lets see if we can place a single object there.

*/

order = slab_order(size, 1, slub_max_order, 1, reserved);

if (order <= slub_max_order)

return order;

/*

* Doh this slab cannot be placed using slub_max_order.

*/

order = slab_order(size, 1, MAX_ORDER, 1, reserved);

if (order < MAX_ORDER)

return order;

return -ENOSYS;

}

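calculate_order() computes the page order of each slab. If the minimum object count slub_min_objects has not been set as a boot parameter, it is derived from the number of processors as 4 * (fls(nr_cpu_ids) + 1). order_objects() gives the maximum number of objects that fit in a slab of order slub_max_order, and min_objects is capped at that value. Two nested while loops then adjust min_objects and fraction, calling slab_order() to find a suitable order. fraction bounds the unused space: the leftover in a slab may be at most 1/fraction of the slab, so a larger value allows less waste. The search starts with fraction = 16 and halves it down to 4, and only when that fails is min_objects decreased by one; the allocator thus gives up first on low waste and then on objects per slab until it finds an acceptable order.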

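If no order within slub_max_order is found even after min_objects and fraction have both reached their lower limits, calculate_order() tries to place a single object per slab (with fraction 1, i.e. any amount of waste is accepted); if the resulting order does not exceed slub_max_order, it is returned. Otherwise it has to allow orders up to MAX_ORDER: an order below MAX_ORDER is returned if one is found, and if not, the function fails with -ENOSYS.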
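calculate_order() and slab_order() can be replayed in userspace to see which order a given object size ends up with. The sketch assumes 4 KiB pages, slub_min_order = 0, slub_max_order = 3, MAX_ORDER = 11, and a 15-bit object counter; the toy_* helpers are hypothetical reimplementations of fls(), get_order(), and order_objects(), not kernel APIs.

```c
#include <assert.h>

/* Assumed configuration: 4 KiB pages and the defaults of that era. */
#define TOY_PAGE_SHIFT 12
#define TOY_PAGE_SIZE  (1UL << TOY_PAGE_SHIFT)
#define TOY_MAX_ORDER  11
#define TOY_MAX_OBJS   32767 /* page->objects is a 15-bit field */

static int slub_min_order = 0;
static int slub_max_order = 3;
static int slub_min_objects = 0; /* 0: derive from the CPU count */

/* 1-based index of the most significant set bit, 0 for x == 0 (like fls()). */
static int toy_fls(unsigned long x)
{
	int r = 0;
	while (x) {
		r++;
		x >>= 1;
	}
	return r;
}

/* Smallest order whose block holds size bytes (like get_order()). */
static int toy_get_order(unsigned long size)
{
	return toy_fls((size - 1) >> TOY_PAGE_SHIFT);
}

/* Objects of `size` bytes that fit in an order-`order` slab (order_objects()). */
static int toy_order_objects(int order, unsigned long size, int reserved)
{
	return (int)(((TOY_PAGE_SIZE << order) - reserved) / size);
}

static int toy_slab_order(int size, int min_objects, int max_order,
			  int fract_leftover, int reserved)
{
	int order;

	if (toy_order_objects(slub_min_order, size, reserved) > TOY_MAX_OBJS)
		return toy_get_order((unsigned long)size * TOY_MAX_OBJS) - 1;

	order = toy_fls((unsigned long)min_objects * size - 1) - TOY_PAGE_SHIFT;
	if (order < slub_min_order)
		order = slub_min_order;
	for (; order <= max_order; order++) {
		unsigned long slab_size = TOY_PAGE_SIZE << order;

		if (slab_size < (unsigned long)min_objects * size + reserved)
			continue; /* min_objects objects do not fit yet */
		if ((slab_size - reserved) % size <= slab_size / fract_leftover)
			break;    /* leftover is within the waste bound */
	}
	return order; /* max_order + 1 if no order qualified */
}

static int toy_calculate_order(int size, int reserved, int nr_cpu_ids)
{
	int order, fraction, max_objects;
	int min_objects = slub_min_objects;

	if (!min_objects)
		min_objects = 4 * (toy_fls(nr_cpu_ids) + 1);
	max_objects = toy_order_objects(slub_max_order, size, reserved);
	if (min_objects > max_objects)
		min_objects = max_objects;

	while (min_objects > 1) {
		for (fraction = 16; fraction >= 4; fraction /= 2) {
			order = toy_slab_order(size, min_objects, slub_max_order,
					       fraction, reserved);
			if (order <= slub_max_order)
				return order;
		}
		min_objects--;
	}
	/* One object per slab, any amount of waste accepted. */
	order = toy_slab_order(size, 1, slub_max_order, 1, reserved);
	if (order <= slub_max_order)
		return order;
	order = toy_slab_order(size, 1, TOY_MAX_ORDER, 1, reserved);
	if (order < TOY_MAX_ORDER)
		return order;
	return -1; /* the kernel returns -ENOSYS here */
}
```

With 4 CPUs, min_objects starts at 16, so 1024-byte objects need a 16 KiB (order-2) slab, while a 40000-byte object cannot fit within slub_max_order at all and ends up with order 4 through the MAX_ORDER fallback.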

Last, the implementation of slab_order():

【file:/mm/slub.c】

/*

* Calculate the order of allocation given an slab object size.

*

* The order of allocation has significant impact on performance and other

* system components. Generally order 0 allocations should be preferred since

* order 0 does not cause fragmentation in the page allocator. Larger objects

* be problematic to put into order 0 slabs because there may be too much

* unused space left. We go to a higher order if more than 1/16th of the slab

* would be wasted.

*

* In order to reach satisfactory performance we must ensure that a minimum

* number of objects is in one slab. Otherwise we may generate too much

* activity on the partial lists which requires taking the list_lock. This is

* less a concern for large slabs though which are rarely used.

*

* slub_max_order specifies the order where we begin to stop considering the

* number of objects in a slab as critical. If we reach slub_max_order then

* we try to keep the page order as low as possible. So we accept more waste

* of space in favor of a small page order.

*

* Higher order allocations also allow the placement of more objects in a

* slab and thereby reduce object handling overhead. If the user has

* requested a higher mininum order then we start with that one instead of

* the smallest order which will fit the object.

*/

static inline int slab_order(int size, int min_objects,

int max_order, int fract_leftover, int reserved)

{

int order;

int rem;

int min_order = slub_min_order;

if (order_objects(min_order, size, reserved) > MAX_OBJS_PER_PAGE)

return get_order(size * MAX_OBJS_PER_PAGE) - 1;

for (order = max(min_order,

fls(min_objects * size - 1) - PAGE_SHIFT);

order <= max_order; order++) {

unsigned long slab_size = PAGE_SIZE << order;

if (slab_size < min_objects * size + reserved)

continue;

rem = (slab_size - reserved) % size;

if (rem <= slab_size / fract_leftover)

break;

}

return order;

}

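The parameters of slab_order(): size is the object size, min_objects the minimum number of objects per slab, max_order the highest order allowed, fract_leftover the waste bound (the unused space may be at most 1/fract_leftover of the slab), and reserved the number of bytes reserved in each slab. The number of objects in a slab is stored in the 15-bit objects field of struct page, so a slab can hold at most MAX_OBJS_PER_PAGE = 32767 objects. Therefore, if the object count that order_objects() computes for min_order (the slab size minus reserved, divided by size) already exceeds MAX_OBJS_PER_PAGE, the order is instead derived from size * MAX_OBJS_PER_PAGE. For larger objects, where a slab holds fewer than MAX_OBJS_PER_PAGE objects, the for loop starts at the larger of slub_min_order and the smallest order that can hold min_objects objects, and raises the order until the slab holds min_objects objects plus the reserved space and the leftover satisfies the waste bound. If no order up to max_order qualifies, the loop ends with order = max_order + 1, which the caller detects through its order <= slub_max_order check.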
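The oo, min, and max fields pack an order and an object count into one word. The sketch below assumes the OO_SHIFT = 16 encoding used by mm/slub.c of this era (not shown in the code above) and 4 KiB pages; all toy_* names are hypothetical.

```c
#include <assert.h>

#define TOY_PAGE_SIZE 4096UL /* assumed 4 KiB pages */
#define OO_SHIFT 16
#define OO_MASK  ((1UL << OO_SHIFT) - 1)

/* Same shape as struct kmem_cache_order_objects: the order in the high bits,
 * the object count in the low 16 bits. */
struct toy_oo {
	unsigned long x;
};

static int toy_order_objects(int order, unsigned long size, int reserved)
{
	return (int)(((TOY_PAGE_SIZE << order) - reserved) / size);
}

static struct toy_oo toy_oo_make(int order, unsigned long size, int reserved)
{
	struct toy_oo oo = { ((unsigned long)order << OO_SHIFT) +
			     (unsigned long)toy_order_objects(order, size, reserved) };
	return oo;
}

static int toy_oo_order(struct toy_oo oo)   { return (int)(oo.x >> OO_SHIFT); }
static int toy_oo_objects(struct toy_oo oo) { return (int)(oo.x & OO_MASK); }
```

For example, an order-1 slab of 700-byte objects holds 11 objects, and 16 reserved bytes reduce an order-0 slab of 64-byte objects from 64 to 63 objects. 8-byte objects in an order-6 slab (reachable only if slub_min_order is raised that far) would give 32768 objects, one more than the 15-bit page->objects field can hold; that is the case the MAX_OBJS_PER_PAGE check guards against.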

Finally, back to kmem_cache_open() to look at the rest of its initialization.

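It continues initializing the SLUB structures. set_min_partial() sets min_partial, the minimum number of slabs kept on each node's partial list, from ilog2(s->size) / 2: the larger the objects, the more partial slabs are kept, which avoids pounding the page allocator excessively. The following if/else-if chain sets cpu_partial according to the object size and the configuration. cpu_partial is the maximum number of objects kept on a CPU's per-CPU partial list, and it also determines: 1) how many objects from per-CPU partial slabs are moved to the per-node list when the limit is reached; 2) how many objects in partial slabs a CPU takes from the per-node list when it runs out of per-CPU objects (only about half, to keep some capacity for frees).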
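Both tuning values can be modeled in userspace. The cpu_partial thresholds are taken from kmem_cache_open() above; the clamp of min_partial to the range [MIN_PARTIAL, MAX_PARTIAL] = [5, 10] is an assumption about set_min_partial() in mm/slub.c of this era, whose body is not shown here. 4 KiB pages are assumed, and all helper names are hypothetical.

```c
#include <assert.h>

#define TOY_PAGE_SIZE 4096UL /* assumed 4 KiB pages */
#define MIN_PARTIAL 5        /* assumed clamp range of set_min_partial() */
#define MAX_PARTIAL 10

/* Floor of log2(v) for v > 0, like the kernel's ilog2(). */
static unsigned long toy_ilog2(unsigned long v)
{
	unsigned long r = 0;
	while (v >>= 1)
		r++;
	return r;
}

/* min_partial = clamp(ilog2(size) / 2, MIN_PARTIAL, MAX_PARTIAL) */
static unsigned long toy_min_partial(unsigned long size)
{
	unsigned long min = toy_ilog2(size) / 2;

	if (min < MIN_PARTIAL)
		min = MIN_PARTIAL;
	else if (min > MAX_PARTIAL)
		min = MAX_PARTIAL;
	return min;
}

/* cpu_partial thresholds as in kmem_cache_open(); has_cpu_partial models
 * kmem_cache_has_cpu_partial(s). */
static unsigned toy_cpu_partial(unsigned long size, int has_cpu_partial)
{
	if (!has_cpu_partial)
		return 0;
	if (size >= TOY_PAGE_SIZE)
		return 2;
	if (size >= 1024)
		return 6;
	if (size >= 256)
		return 13;
	return 30;
}
```

Small objects therefore get a large per-CPU partial budget (30 objects) but the minimum of 5 partial slabs per node, while page-sized objects keep only 2 objects on the per-CPU partial list.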

Next in kmem_cache_open() comes init_kmem_cache_nodes():

【file:/mm/slub.c】

static int init_kmem_cache_nodes(struct kmem_cache *s)

{

int node;

for_each_node_state(node, N_NORMAL_MEMORY) {

struct kmem_cache_node *n;

if (slab_state == DOWN) {

early_kmem_cache_node_alloc(node);

continue;

}

n = kmem_cache_alloc_node(kmem_cache_node,

GFP_KERNEL, node);

if (!n) {

free_kmem_cache_nodes(s);

return 0;

}

s->node[node] = n;

init_kmem_cache_node(n);

}

return 1;

}

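The function walks every node with normal memory via for_each_node_state(node, N_NORMAL_MEMORY), allocates a kmem_cache_node structure for each node from the global kmem_cache_node cache, stores it in s->node[node], and initializes it with init_kmem_cache_node(). If an allocation fails, the structures allocated so far are freed and 0 is returned.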

Note that a slab_state of DOWN means the SLUB allocator has not finished initializing: the cache for kmem_cache_node structures does not exist yet, so no objects can be allocated from it. In that case early_kmem_cache_node_alloc() creates the slab for kmem_cache_node objects by hand. This branch is only taken during SLUB initialization, along the path mm_init() -> kmem_cache_init() -> create_boot_cache(kmem_cache_node, "kmem_cache_node", sizeof(struct kmem_cache_node), SLAB_HWCACHE_ALIGN) -> __kmem_cache_create() -> kmem_cache_open() -> init_kmem_cache_nodes() -> early_kmem_cache_node_alloc(). After create_boot_cache() and register_hotmemory_notifier() have returned, kmem_cache_init() sets slab_state to PARTIAL, meaning kmem_cache_node objects can now be allocated normally.

Otherwise, once the kmem_cache_node slab exists, kmem_cache_alloc_node() allocates a free object from the initialized kmem_cache_node cache.

To fill in the part skipped earlier, here is the implementation of early_kmem_cache_node_alloc():

【file:/mm/slub.c】

/*

* No kmalloc_node yet so do it by hand. We know that this is the first

* slab on the node for this slabcache. There are no concurrent accesses

* possible.

*

* Note that this function only works on the kmem_cache_node

* when allocating for the kmem_cache_node. This is used for bootstrapping

* memory on a fresh node that has no slab structures yet.

*/

static void early_kmem_cache_node_alloc(int node)

{

struct page *page;

struct kmem_cache_node *n;

BUG_ON(kmem_cache_node->size < sizeof(struct kmem_cache_node));

page = new_slab(kmem_cache_node, GFP_NOWAIT, node);

BUG_ON(!page);

if (page_to_nid(page) != node) {

printk(KERN_ERR "SLUB: Unable to allocate memory from "

"node %d\n", node);

printk(KERN_ERR "SLUB: Allocating a useless per node structure "

"in order to be able to continue\n");

}

n = page->freelist;

BUG_ON(!n);

page->freelist = get_freepointer(kmem_cache_node, n);

page->inuse = 1;

page->frozen = 0;

kmem_cache_node->node[node] = n;

#ifdef CONFIG_SLUB_DEBUG

init_object(kmem_cache_node, n, SLUB_RED_ACTIVE);

init_tracking(kmem_cache_node, n);

#endif

init_kmem_cache_node(n);

inc_slabs_node(kmem_cache_node, node, page->objects);

/*

* No locks need to be taken here as it has just been

* initialized and there is no concurrent access.

*/

__add_partial(n, page, DEACTIVATE_TO_HEAD);

}

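The function first creates a slab for kmem_cache_node objects with new_slab(); if the slab does not come from the requested memory node, printk() reports it and boot continues with that structure anyway. It then takes the first object off the slab's freelist (page->freelist advances to the next free object via get_freepointer(), inuse becomes 1, and frozen is cleared) and stores it in kmem_cache_node->node[node]. With CONFIG_SLUB_DEBUG, the object is initialized for debugging: init_object() marks the data area and the red zone, and init_tracking() initializes the tracking records. init_kmem_cache_node() then initializes the structure and inc_slabs_node() updates the statistics; the earlier call inside new_slab() could not count this slab, because kmem_cache_node->node[node] was still NULL at that point. Finally the slab is added to the node's partial list.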

Going one level deeper, the implementation of new_slab():

【file:/mm/slub.c】

static struct page *new_slab(struct kmem_cache *s, gfp_t flags, int node)

{

struct page *page;

void *start;

void *last;

void *p;

int order;

BUG_ON(flags & GFP_SLAB_BUG_MASK);

page = allocate_slab(s,

flags & (GFP_RECLAIM_MASK | GFP_CONSTRAINT_MASK), node);

if (!page)

goto out;

order = compound_order(page);

inc_slabs_node(s, page_to_nid(page), page->objects);

memcg_bind_pages(s, order);

page->slab_cache = s;

__SetPageSlab(page);

if (page->pfmemalloc)

SetPageSlabPfmemalloc(page);

start = page_address(page);

if (unlikely(s->flags & SLAB_POISON))

memset(start, POISON_INUSE, PAGE_SIZE << order);

last = start;

for_each_object(p, s, start, page->objects) {

setup_object(s, page, last);

set_freepointer(s, last, p);

last = p;

}

setup_object(s, page, last);

set_freepointer(s, last, NULL);

page->freelist = start;

page->inuse = page->objects;

page->frozen = 1;

out:

return page;

}

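new_slab() first allocates the pages of a slab with allocate_slab(), then reads the order of the allocation from the slab's first page with compound_order(). inc_slabs_node() updates the slab statistics of the memory node, and memcg_bind_pages() updates the memory cgroup's page accounting. The page is then tagged with its cache (page->slab_cache) and marked as a slab page. page_address() returns the virtual address of the pages, and if SLAB_POISON is set, memset() fills the whole slab with POISON_INUSE. Finally for_each_object() walks every object: setup_object() initializes each object (running the constructor, if any), and set_freepointer() sets its free object pointer to the next object, so that all objects form a singly linked freelist terminated by NULL. page->freelist points to the first object, and inuse = objects and frozen = 1, since a new slab is normally handed straight to a CPU as its active slab.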
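The freelist that new_slab() threads through a fresh slab, and the way early_kmem_cache_node_alloc() pops its first object, can be modeled in userspace. toy_set_freepointer() and toy_get_freepointer() are hypothetical stand-ins for set_freepointer() and get_freepointer() that store the next pointer at byte offset offset inside each object. Unlike the kernel loop above, whose first iteration (p == start) briefly links the first object to itself before the next iteration overwrites that link, the model links each object exactly once.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Store the "next free object" pointer inside object at byte offset `offset`.
 * memcpy() avoids alignment assumptions in this userspace model. */
static void toy_set_freepointer(void *object, size_t offset, void *next)
{
	memcpy((char *)object + offset, &next, sizeof(next));
}

static void *toy_get_freepointer(void *object, size_t offset)
{
	void *next;

	memcpy(&next, (char *)object + offset, sizeof(next));
	return next;
}

/* Thread `objects` (>= 1) objects of stride `size` starting at `start` into a
 * NULL-terminated freelist in address order; return its head (page->freelist). */
static void *toy_build_freelist(void *start, size_t size, size_t offset,
				int objects)
{
	char *p = start;

	for (int i = 0; i < objects - 1; i++)
		toy_set_freepointer(p + (size_t)i * size, offset,
				    p + (size_t)(i + 1) * size);
	toy_set_freepointer(p + (size_t)(objects - 1) * size, offset, NULL);
	return start;
}
```

Popping an object is then just head = toy_get_freepointer(head, offset), which is what early_kmem_cache_node_alloc() does with page->freelist.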

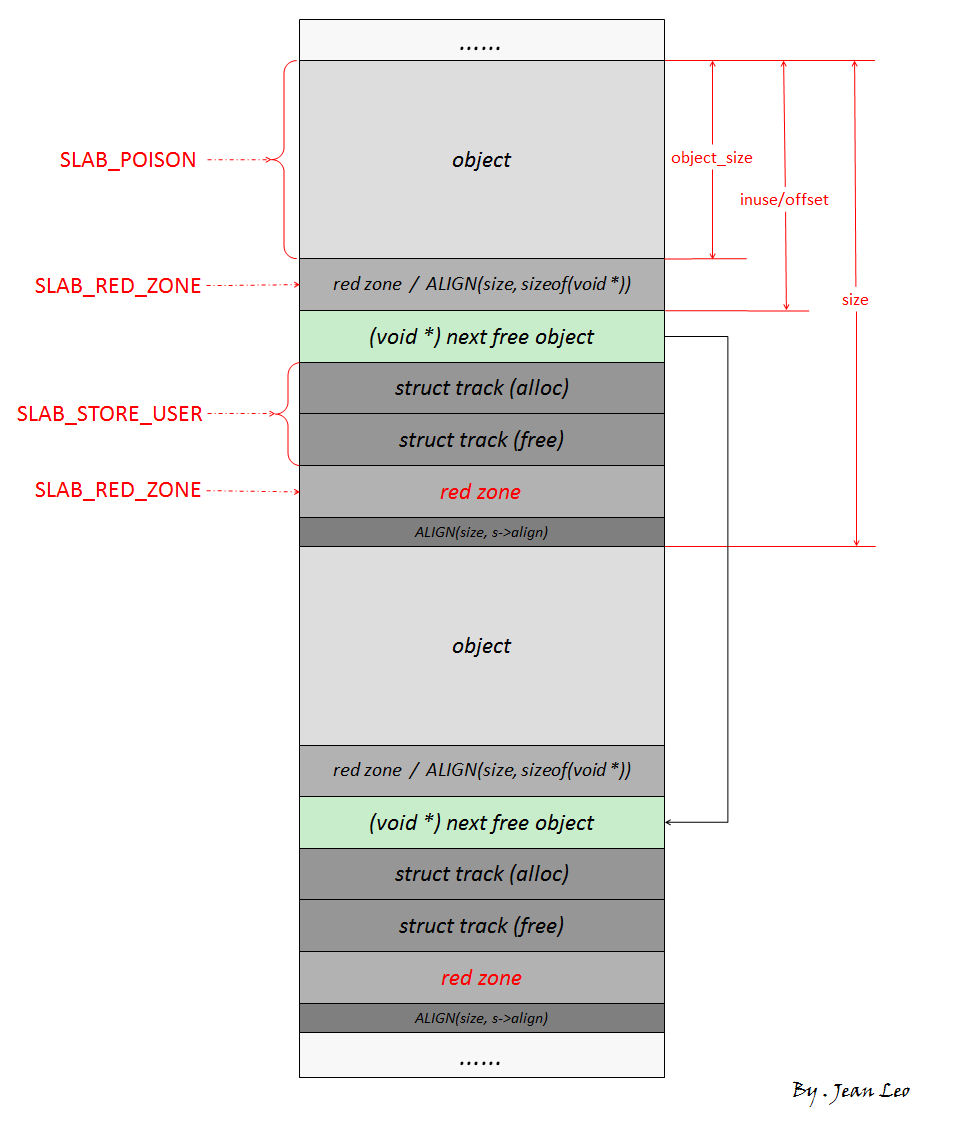

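Putting these initialization steps together, the figure above summarizes the layout of an object in a newly created slab when all of these options are enabled.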

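As described for the members of struct kmem_cache in the earlier article, object_size is the actual size of the object, inuse is the metadata offset (which is also the number of bytes the object actually uses), and offset is the offset at which the free object pointer is stored. inuse and offset are equal in the figure, but that is not always the case: inuse is always set, whereas offset is only non-zero when the free pointer has been moved behind the object; otherwise it is 0 and the free pointer overlays the first word of the object. The size member is the size of the whole object including all of its metadata. SLAB_POISON marks only the object_size bytes of the object, SLAB_RED_ZONE marks the space between the end of the object and the free pointer, and the track records follow the free pointer. BTW, the extra sizeof(void *) that SLAB_RED_ZONE adds after the track records does not seem to be used; I may not have read the code closely enough, and will look into it again later (one possibility is that it serves as trailing padding that check_pad_bytes() expects to still hold POISON_INUSE when poisoning is enabled).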

Continuing down the code, the implementation of allocate_slab():

【file:/mm/slub.c】

static struct page *allocate_slab(struct kmem_cache *s, gfp_t flags, int node)

{

struct page *page;

struct kmem_cache_order_objects oo = s->oo;

gfp_t alloc_gfp;

flags &= gfp_allowed_mask;

if (flags & __GFP_WAIT)

local_irq_enable();

flags |= s->allocflags;

/*

* Let the initial higher-order allocation fail under memory pressure

* so we fall-back to the minimum order allocation.

*/

alloc_gfp = (flags | __GFP_NOWARN | __GFP_NORETRY) & ~__GFP_NOFAIL;

page = alloc_slab_page(alloc_gfp, node, oo);

if (unlikely(!page)) {

oo = s->min;

/*

* Allocation may have failed due to fragmentation.

* Try a lower order alloc if possible

*/

page = alloc_slab_page(flags, node, oo);

if (page)

stat(s, ORDER_FALLBACK);

}

if (kmemcheck_enabled && page

&& !(s->flags & (SLAB_NOTRACK | DEBUG_DEFAULT_FLAGS))) {

int pages = 1 << oo_order(oo);

kmemcheck_alloc_shadow(page, oo_order(oo), flags, node);

/*

* Objects from caches that have a constructor don't get

* cleared when they're allocated, so we need to do it here.

*/

if (s->ctor)

kmemcheck_mark_uninitialized_pages(page, pages);

else

kmemcheck_mark_unallocated_pages(page, pages);

}

if (flags & __GFP_WAIT)

local_irq_disable();

if (!page)

return NULL;

page->objects = oo_objects(oo);

mod_zone_page_state(page_zone(page),

(s->flags & SLAB_RECLAIM_ACCOUNT) ?

NR_SLAB_RECLAIMABLE : NR_SLAB_UNRECLAIMABLE,

1 << oo_order(oo));

return page;

}

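If the allocation flags include __GFP_WAIT, meaning the caller is allowed to wait, interrupts are enabled with local_irq_enable() for the duration of the allocation. The first attempt uses the normal configuration s->oo and calls alloc_slab_page() with __GFP_NOWARN | __GFP_NORETRY added and __GFP_NOFAIL removed, so that the higher-order request fails quickly under memory pressure. If it fails, allocate_slab() falls back to the lower order in s->min and tries again with the original flags (a success here is counted as ORDER_FALLBACK). If the allocation succeeded, kmemcheck is enabled (kmemcheck_enabled is true), and the cache's flags contain none of the bits in SLAB_NOTRACK or DEBUG_DEFAULT_FLAGS, the kmemcheck shadow pages are set up. Interrupts are disabled again if __GFP_WAIT was set. Finally page->objects is set from the configuration actually used, and mod_zone_page_state() updates the zone's NR_SLAB_RECLAIMABLE or NR_SLAB_UNRECLAIMABLE counter.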
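The order fallback of allocate_slab() reduces to a small decision procedure. In the sketch below the page allocator is replaced by a hypothetical stub that only succeeds up to a given order, simulating fragmentation; GFP flags, interrupts, and kmemcheck are not modeled, and all names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical page-allocator stub: succeeds only for orders up to
 * max_free_order, simulating a fragmented buddy allocator. */
static bool toy_alloc_pages(int order, int max_free_order)
{
	return order <= max_free_order;
}

/* Policy of allocate_slab(): try the preferred order (s->oo) first, then
 * the minimum order (s->min). Returns the order obtained, or -1 on failure;
 * *fallback tells whether s->min was used (counted as ORDER_FALLBACK). */
static int toy_allocate_slab(int oo_order, int min_order, int max_free_order,
			     bool *fallback)
{
	*fallback = false;
	if (toy_alloc_pages(oo_order, max_free_order))
		return oo_order;
	if (toy_alloc_pages(min_order, max_free_order)) {
		*fallback = true;
		return min_order;
	}
	return -1;
}
```

Because page->objects is taken from whichever configuration succeeded, slabs of the same cache can hold different numbers of objects after a fallback.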

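alloc_slab_page() allocates the pages from the buddy allocator: it adds __GFP_NOTRACK and calls alloc_pages() when no node is specified (NUMA_NO_NODE), or alloc_pages_exact_node() for a specific node: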

【file:/mm/slub.c】

/*

* Slab allocation and freeing

*/

static inline struct page *alloc_slab_page(gfp_t flags, int node,

struct kmem_cache_order_objects oo)

{

int order = oo_order(oo);

flags |= __GFP_NOTRACK;

if (node == NUMA_NO_NODE)

return alloc_pages(flags, order);

else

return alloc_pages_exact_node(node, flags, order);

}

The buddy allocator itself was covered earlier and is not repeated here.

Back in kmem_cache_open(): if init_kmem_cache_nodes() succeeds, alloc_kmem_cache_cpus() is called next:

【file:/mm/slub.c】

static inline int alloc_kmem_cache_cpus(struct kmem_cache *s)

{

BUILD_BUG_ON(PERCPU_DYNAMIC_EARLY_SIZE <

KMALLOC_SHIFT_HIGH * sizeof(struct kmem_cache_cpu));

/*

* Must align to double word boundary for the double cmpxchg

* instructions to work; see __pcpu_double_call_return_bool().

*/

s->cpu_slab = __alloc_percpu(sizeof(struct kmem_cache_cpu),

2 * sizeof(void *));

if (!s->cpu_slab)

return 0;

init_kmem_cache_cpus(s);

return 1;

}

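It allocates a struct kmem_cache_cpu for every CPU with __alloc_percpu(), aligned to two words as the double cmpxchg instructions require, and then calls init_kmem_cache_cpus(), which sets each CPU's transaction id (tid) to its initial value init_tid(cpu):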

【file:/mm/slub.c】

static void init_kmem_cache_cpus(struct kmem_cache *s)

{

int cpu;

for_each_possible_cpu(cpu)

per_cpu_ptr(s->cpu_slab, cpu)->tid = init_tid(cpu);

}
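init_tid() seeds each CPU's tid so that the lockless fast path can tell, through its cmpxchg_double, whether another operation or a migration to a different CPU happened between reading and updating the per-CPU freelist. The sketch assumes CONFIG_PREEMPT with CONFIG_NR_CPUS = 8, so that TID_STEP (NR_CPUS rounded up to a power of two) is 8; it is based on the helpers next_tid(), tid_to_cpu(), and tid_to_event() in mm/slub.c of this era, which are not shown here, and the toy_* names are hypothetical.

```c
#include <assert.h>

/* Assumption: CONFIG_PREEMPT and CONFIG_NR_CPUS = 8, so TID_STEP is 8. */
#define TOY_TID_STEP 8UL

/* Initial tid of a CPU: just its CPU number (like init_tid()). */
static unsigned long toy_init_tid(int cpu)
{
	return (unsigned long)cpu;
}

/* Each successful fast-path operation advances the tid by one step. */
static unsigned long toy_next_tid(unsigned long tid)
{
	return tid + TOY_TID_STEP;
}

/* The low part identifies the CPU, the high part counts events. */
static unsigned int toy_tid_to_cpu(unsigned long tid)
{
	return (unsigned int)(tid % TOY_TID_STEP);
}

static unsigned long toy_tid_to_event(unsigned long tid)
{
	return tid / TOY_TID_STEP;
}
```

Since tids of different CPUs never collide and every operation changes the tid, a stale tid read before preemption makes the cmpxchg fail and the fast path retry.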

This completes the analysis of cache creation in the SLUB allocator.